|

|

|

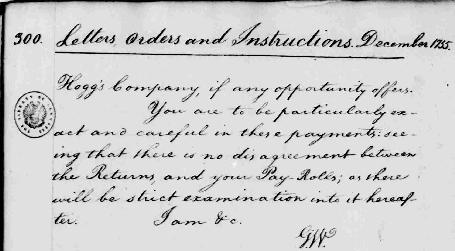

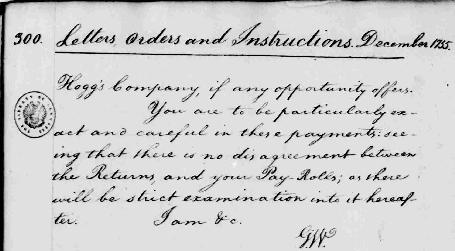

Example page from the George Washington collection.

|

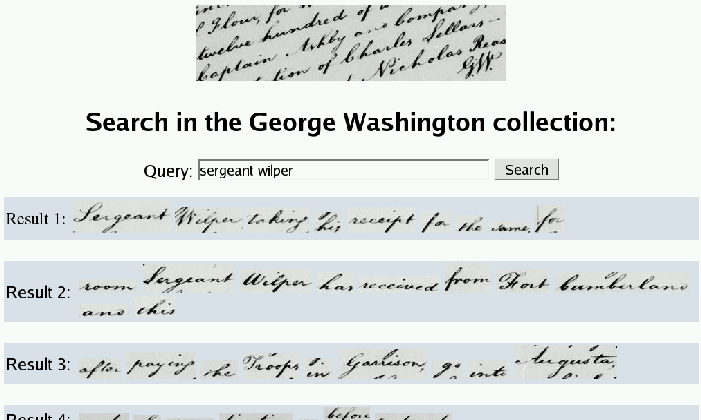

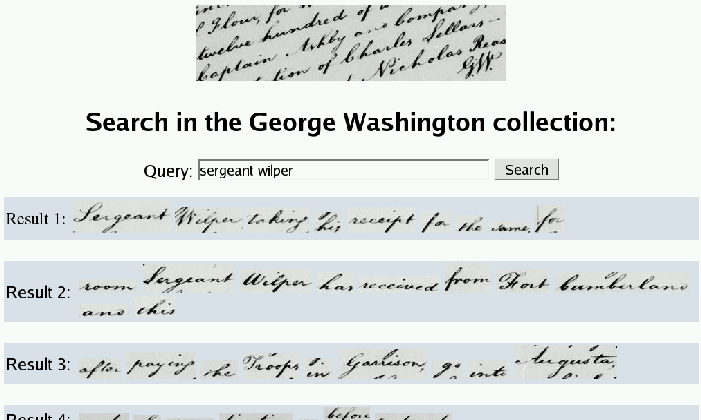

Screenshot of the line retrieval demo system.

|

|

|

|

Example page from the George Washington collection.

|

Screenshot of the line retrieval demo system.

|

Line retrieval: small collection of 20

pages total. This collection was automatically segmented into

words. A manually corrected version of this dataset with

high-quality segmentation information can be found on the CIIR

download page

(click on Word Image Data Sets).

Line retrieval: small collection of 20

pages total. This collection was automatically segmented into

words. A manually corrected version of this dataset with

high-quality segmentation information can be found on the CIIR

download page

(click on Word Image Data Sets). Try me first!

Page retrieval using probabilistic

annotation: large collection of 1000 pages, fast query response.

Try me first!

Page retrieval using probabilistic

annotation: large collection of 1000 pages, fast query response.

Page retrieval using Kullback-Leibler

scoring (will be available later): 1000 pages; the collection is

searched in realtime, resulting in a query response time of about 50

seconds.

Page retrieval using Kullback-Leibler

scoring (will be available later): 1000 pages; the collection is

searched in realtime, resulting in a query response time of about 50

seconds.

|