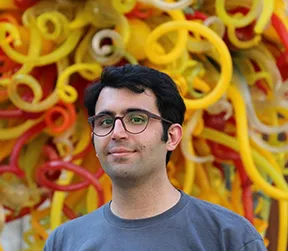

Speaker: Mostafa Dehghani, Google Brain

Title: Universal Models for Language and Beyond

Date: Friday, September 16, 2022, 1:30 - 2:30 PM EDT (Eastern Daylight Time), via Zoom. On-campus attendees will gather in CS 151 to view the presentation.

Zoom Access: Zoom Link. Reach out to Hamed Zamani for the passcode.

Abstract: This talk makes the case for universal models in language, as well as potential ways to go beyond the text modality, an emergent and compelling research direction. Universal models are models that dispense with task-specific niches altogether. I discuss the recent trend of unification with respect to model, task, and input representation, and how these research areas come together to push forward the vision of truly universal language models. Specifically, I discuss several efforts that we are working on, such as Unifying Language Learning Paradigms (UL2 model), Unifying Retrieval and Search with NLP (Differentiable Search Indexes), and Multimodal Unifications (ViT, PolyViT, etc.), and finally how scaling up is a safe bet toward such a goal.

Bio: Mostafa Dehghani is a research scientist at Google Brain. He has been working on scaling neural networks for language, vision, and robotics. Besides large-scale models, he works on improving the allocation of compute in neural networks, in particular Transformers, via adaptive and conditional computation. Mostafa obtained his Ph.D. from the University of Amsterdam, where he worked on training neural networks with imperfect supervision.